Briefing report No. 7

by the European Digital Education Hub’s squad on artificial intelligence in education. Authors: Jessica Niewint-Gori, Dara Cassidy, Riina Vuorikari, Francisco Bellas, Lidija Kralj.

The focus of this report is to explore the potential of a number of related areas in the domain of teaching with artificial intelligence (AI) – assessment, feedback and personalisation. It builds on the previous briefing reports, each of which have explored different facets of the use of AI in education. One of the most touted benefits of AI for education is the potential it offers for personalisation – the delivery of education

The focus of this report is to explore the potential of a number of related areas in the domain of teaching with artificial intelligence (AI) – assessment, feedback and personalisation. It builds on the previous briefing reports, each of which have explored different facets of the use of AI in education. One of the most touted benefits of AI for education is the potential it offers for personalisation – the delivery of education

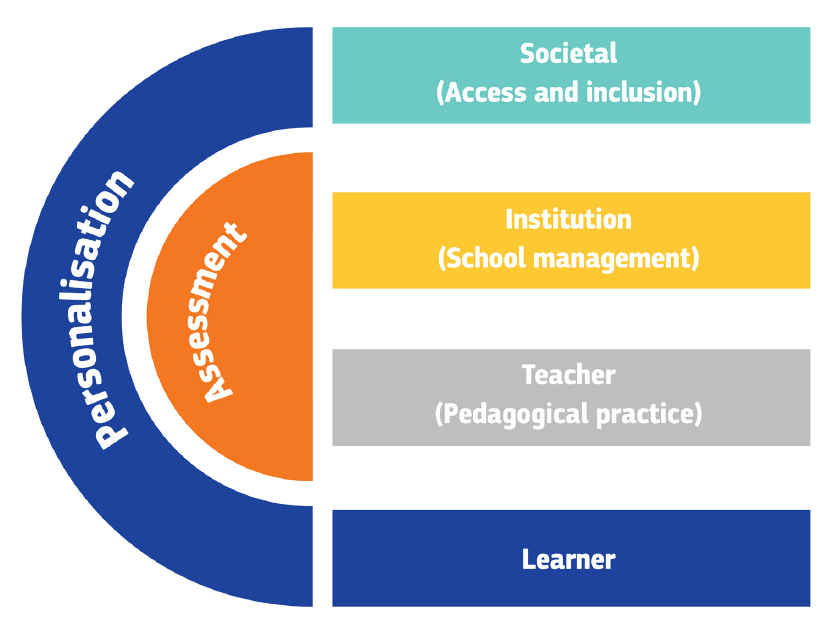

interventions that are tailored to the specific needs of individual learners. This may be manifest in a variety of ways, including via adaptive learning and intelligent tutoring systems. At the core of this capacity is the ability to assess a learner’s mastery of a particular concept, identify gaps in knowledge or areas for improvement, and deliver feedback or resources to address that gap (Phillips et al, 2020). The ability to

harness AI to create high quality assessments, feedback and tailored resources has the potential to deliver benefits for individual students, teachers, education institutions, and society as a whole.

In weighing this potential, it is important to consider education in all its complexity and to be mindful of the risks as well as the benefits. As detailed in Briefing Report No. 5, “The Influence of AI on Governance in Education”, the draft EU Artificial Intelligence Act proposes a risk-based approach to AI built on four risk levels: unacceptable, high, limited, and minimal. Throughout this report, we aim to draw attention to the potential for risk as we explore how AI’s capacity for personalisation might deliver benefits at several levels of the education system (learner, teacher and institution, following the distinction used by Wayne Holmes et al., 2022) and ultimately at the broader societal level.

…

The briefing report ends with recommendations by the squad:

AI holds great promise for enhancing education, but it should be implemented responsibly to protect students’ rights and interests. Proper checks and balances, transparency, and human oversight are key to mitigating the potential risks associated with AI in education. AI should be used to complement and enhance existing pedagogical practices rather than replace them.

AI algorithms, especially in education, should be designed to produce understandable and interpretable outcomes. Explainable AI aims to make AI decision-making processes transparent, so that users can understand how a system arrived at its conclusions; this is particularly crucial in areas such as assessment. Even where AI automates various processes, human oversight should remain a significant part of the system. Educators should have the final say in grading and in any decision that significantly affects a student’s academic standing.

AI systems must respect and protect students’ privacy. Data handling procedures should comply with privacy laws and regulations, ensuring the confidentiality and security of sensitive student information. Biases can undermine the fairness of a system and have serious implications for all stakeholders in education, so efforts should be made to identify and mitigate biases in AI algorithms. If a system fails or produces erroneous results, mechanisms should be in place to identify the cause of the issue and rectify it. Finally, AI systems should be regularly monitored and evaluated to verify that they perform accurately, to identify and address emerging issues promptly, and to help ensure fairness and effectiveness.

Read the whole report No. 7, “Teaching with AI – Assessment, Feedback and Personalisation”, and find all other briefing reports by the European Digital Education Hub’s squad on artificial intelligence in education in the post “Learning journey for, about, and with AI”.

We also invite you to join the European Digital Education Hub.